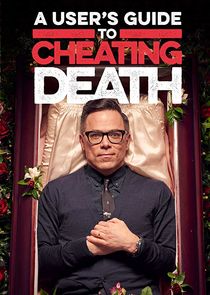

A User's Guide to Cheating Death S01E05 - Au Natural - Turning Our Back to Modern Medicine

Synopsis

"Let your food be your medicine and your medicine be your food," said the famous Greek physician Hippocrates. Unfortunately, he said it long before most of our medical understanding existed. As a society, we have become obsessed with organic food and products, chemical-free farming, healing foods, and natural remedies. People distrust GMOs, pharmaceuticals, and other "non-natural" solutions. Organic products fetch a premium price. Families are turning to holistic approaches to medical issues, sometimes with fatal consequences. But what is causing people to distrust modern medicine and modern approaches to farming? Where does this romantic notion that "natural is better" come from? And most importantly, are these stances scientifically supportable?

Information

- Status: Aired

- Air Date: 16/10/2017

- Episode Length: 60 minutes

- Network: VisionTV